This article is produced by NetEase Smart Studio (public account smartman163). Focus on AI and read the next big era!

[NetEase Smart News, August 10] As an artificial intelligence researcher, I often think about people's fear of artificial intelligence.

However, at present, it is hard for me to imagine how the computers I develop could become the monsters of a future world. Sometimes, like Oppenheimer lamenting after he led the construction of the world's first nuclear bomb, I wonder whether I too might become a "destroyer of worlds".

I suppose I would earn a place in history, but an infamous one. As an artificial intelligence scientist, what am I worried about?

Fear of the unknown

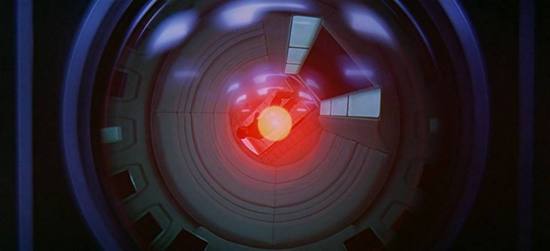

The computer HAL 9000, conceived by the science fiction writer Arthur C. Clarke and brought to the screen by the film director Stanley Kubrick, is a good example of a system that fails through unintended consequences. In many complex systems, such as the Titanic, NASA's space shuttle, and the Chernobyl nuclear power plant, engineers put together many different components. The designers may have known very well how each individual element worked, but they did not understand well enough how the elements would behave once linked together, and the result was unintended consequences.

Systems that are not fully understood are hard to control and tend to produce unintended consequences. The sinking of a steamship, the loss of two space shuttles, and the radioactive contamination of Europe and Asia: in each disaster, a series of failures combined into a catastrophe.

In my opinion, artificial intelligence is currently in the same situation. We take the latest research results from cognitive science, turn them into algorithms, and add them to existing systems. We have begun to engineer artificial intelligence without fully understanding what it is.

Take IBM's Watson and Google's Alpha system, for example. We have combined enormous computing power with artificial neural networks to help them achieve a series of impressive results. If these machines fail, the worst that happens is that Watson does not win at "Jeopardy!" or that Alpha does not defeat a Go master. Such mistakes are not fatal.

However, as the design of artificial intelligence becomes more complex and computer processors become faster, the skills of these systems will improve. The risk of accidents rises with them, and so does our responsibility. We often say that "nobody is perfect," and the systems we create are certain to have flaws as well.

Fear of abuse

At present, I am developing artificial intelligence with an approach called neuroevolution, and I am not very worried about unintended consequences in the systems I build this way. I create virtual environments and evolve digital organisms whose brains must solve increasingly complex tasks. We evaluate the performance of these creatures, and the best performers are selected to reproduce and form the next generation. In this way, over many generations, these machine organisms gradually evolve cognitive abilities.
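The evaluate, select, and reproduce loop described above can be sketched in a few lines of Python. This is only an illustrative toy under stated assumptions, not the author's actual system: the XOR task, the fixed nine-weight network, the Gaussian mutation, and the names `brain`, `fitness`, and `evolve` are all hypothetical choices made for this example.

```python
import math
import random

# Hypothetical toy task (not from the article): output the XOR of two inputs.
TASK = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0),
        ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
N_WEIGHTS = 9  # 2 hidden units x (2 weights + bias) + output (2 weights + bias)


def brain(weights, inputs):
    """Forward pass of a tiny fixed network: 2 inputs, 2 hidden units, 1 output."""
    x, y = inputs
    h1 = math.tanh(weights[0] * x + weights[1] * y + weights[2])
    h2 = math.tanh(weights[3] * x + weights[4] * y + weights[5])
    # Squash the output into the range [0, 1].
    return 0.5 * (math.tanh(weights[6] * h1 + weights[7] * h2 + weights[8]) + 1.0)


def fitness(weights):
    """Higher is better: negative squared error over the whole task."""
    return -sum((brain(weights, inp) - target) ** 2 for inp, target in TASK)


def evolve(pop_size=50, n_parents=10, generations=100, sigma=0.3, seed=0):
    """Evaluate, select the best performers, and let them reproduce with mutation."""
    rng = random.Random(seed)
    population = [[rng.gauss(0.0, 1.0) for _ in range(N_WEIGHTS)]
                  for _ in range(pop_size)]
    history = []  # best fitness seen in each generation
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[:n_parents]
        # Parents survive unchanged; the rest of the next generation
        # consists of mutated copies of randomly chosen parents.
        children = [[w + rng.gauss(0.0, sigma) for w in rng.choice(parents)]
                    for _ in range(pop_size - n_parents)]
        population = parents + children
    best = max(population, key=fitness)
    return best, history


if __name__ == "__main__":
    best, history = evolve()
    print("best fitness, first generation:", history[0])
    print("best fitness, last generation: ", history[-1])
```

Because the selected parents are carried over unchanged, the best fitness in this sketch can never get worse from one generation to the next. Real neuroevolution systems typically evolve far richer brains and environments, and may evolve the network structure as well as its weights.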

For now, we set very simple tasks for these machine creatures. For example, they can perform simple navigation tasks or make simple decisions. But soon we will evolve machines that can perform more complicated tasks. Ultimately, we hope to reach the level of human intelligence.

Along the way, we will find problems and fix them. Each generation of machines handles the problems that arose in previous generations better. This helps us reduce the probability of unexpected outcomes when such machines actually enter the world in the future.

In addition, this generation-by-generation method of improvement can help us develop the ethical and moral side of artificial intelligence. Ultimately, we want to evolve artificial intelligence that shows human moral traits such as trustworthiness and altruism. We can set up virtual environments that reward machines for showing friendliness, honesty, and empathy. Maybe this is a way to ensure that we develop more obedient servants or more trustworthy partners. At the same time, it can reduce the chance of creating cold-blooded killer robots.

Although neuroevolution may reduce the likelihood of uncontrollable outcomes, it does not prevent abuse. But that is a moral issue, not a scientific one. As a scientist, I have an obligation to tell the truth. I must report what I find in my experiments, whether or not I like the results. My focus is not on deciding what I would like to be true but on showing what the results are.

Fear of wrong social priorities

Although I am a scientist, I am still a human being. To some extent, I must reconnect with my hopes and fears. As a political and moral being, I must think about the impact my work may have on society as a whole.

Neither the research community nor society as a whole has a clear idea of what artificial intelligence is or what it should become. Of course, this may be because we do not yet know what it is capable of. But we really do need to decide what outcome we want from the development of advanced artificial intelligence.

One important issue that concerns people is employment. Robots already perform physical labor such as welding automotive parts. In the near future, they may also do cognitive work that we once thought only humans could do. Self-driving cars could replace taxi drivers; self-flying aircraft could replace pilots.

In the future, when we need medical care, we will not have to wait in line for a doctor who may be exhausted. We could be examined and diagnosed by a system that has absorbed all medical knowledge, and then have surgery performed right away by a tireless robot. Legal advice may come from an omniscient legal database; investment advice may come from a market forecasting system.

Maybe one day, all human work will be done by machines. Even my own work could be done faster by a large number of machines tirelessly researching how to make smarter machines.

In our current society, automation will put more people out of work. Those who own the machines will become richer, and everyone else will become poorer. This is not a scientific issue but a political and socio-economic problem that needs to be solved.

Fear of the nightmare scenario

There is one final fear: if artificial intelligence keeps evolving and eventually surpasses human intelligence, will a superintelligent system decide that it no longer needs humans? When a superintelligent creature can accomplish what we never could, how will we humans define our own place? Can we stop the robots we created from erasing us from the Earth?

At that point, one of the key questions will be: how can we convince a superintelligence to keep us around?

I think I would say that I am a good person and that I created you. I would appeal to the superintelligence's compassion to keep me, a compassionate human being, alive. I would also argue that diversity has value in itself, and that the universe is so large that the existence of human beings in it probably makes no difference at all.

However, it is too difficult for me to speak for all of humanity and present a unified view on its behalf. And when I look closely at ourselves, I see that we have fatal flaws: we hate one another. We fight one another. We distribute food, knowledge, and medical care unequally. We pollute the Earth. There are many wonderful things in the world, but everything that is wrong or bad steadily weakens the case for human existence.

Fortunately, we do not need to justify our existence just yet. We still have time, about 50 to 250 years, depending on how fast artificial intelligence develops. As a species, we should unite and come up with a good answer to the question of why a superintelligence should not wipe us out. But this is hard to do: we say that we welcome diversity, but in practice we do not, just as we say that we want to save the Earth's species but in the end fail to do so.

All of us, as individuals and as a society, need to prepare for this nightmare scenario. We should use the time we have left to think about how to show the superintelligence we create that human existence is valuable. Or we can decide that this will never happen and that we need not worry about it. However, beyond the potential physical danger, superintelligence may also bring political and economic dangers. If we do not find a better way of distributing wealth, artificial intelligence laborers will fuel a capitalism in which only a very few people own the means of production. (Selected from: ROBOTPHOBIA. Translation: NetEase Seeing Intelligent Compilation Robot. Review: Yang Xu)
