The author has always felt it would be exciting to understand all of the code, from the application through the framework down to the operating system. The previous blog post covered blocking and non-blocking sockets; this article covers socket close (using TCP as the example, based on the linux-2.6.24 kernel).

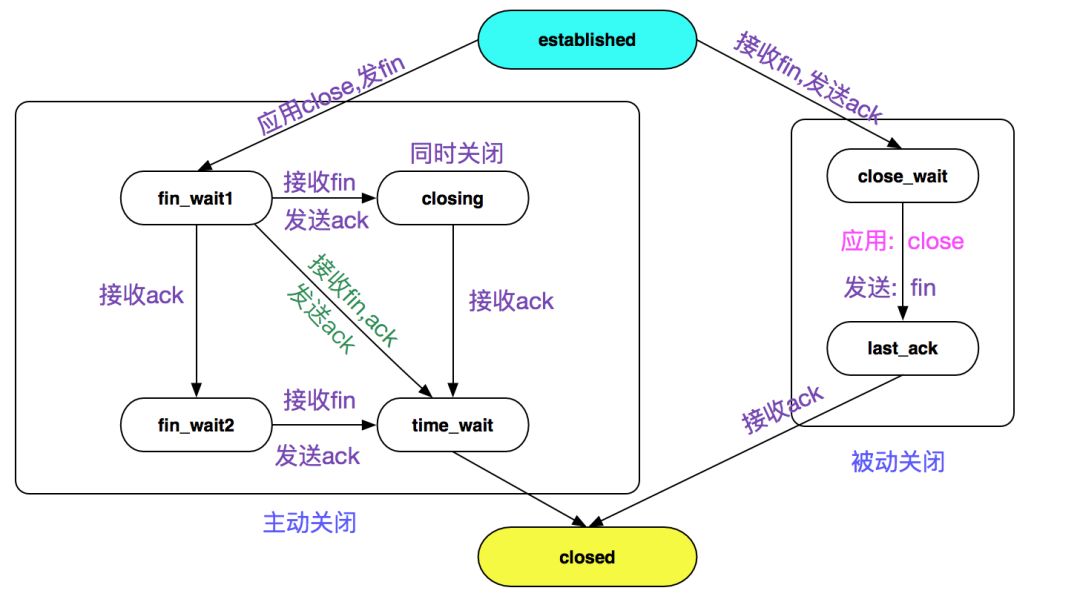

TCP close state transition diagram

As is well known, closing a TCP connection takes four "waves" (a four-way handshake), and every state change follows the TCP state transition diagram, as shown in the following figure:

There are three kinds of TCP close: active close, passive close, and simultaneous close (a special case not described here).

Active close

The close(fd) process

Taking C as an example, we close a socket with the close(fd) function:

```c
#include <sys/socket.h>
#include <unistd.h>

int main(void)
{
    int socket_fd = socket(AF_INET, SOCK_STREAM, 0);
    /* ... */
    // Close the socket through its file descriptor
    close(socket_fd);
    return 0;
}
```

close(int fd) enters the kernel through the sys_close system call:

```c
asmlinkage long sys_close(unsigned int fd)
{
    /* ... (excerpt: locking and error checks omitted) */

    // Clear this fd's bit in the close_on_exec bitmap
    // (fds marked close-on-exec are closed automatically on execve)
    FD_CLR(fd, fdt->close_on_exec);

    // Release the file descriptor:
    // clear its bit in fdt->open_fds (the bitmap of open fds),
    // and lower files->next_fd if needed so this fd number can be reused
    __put_unused_fd(files, fd);

    // Call filp_close on the struct file to do the real cleanup
    retval = filp_close(filp, files);

    /* ... */
}
```

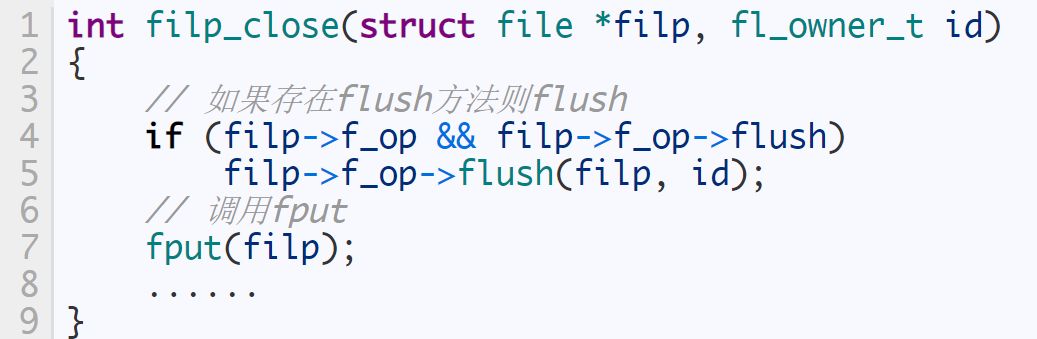

As we can see, sys_close ends by calling filp_close:

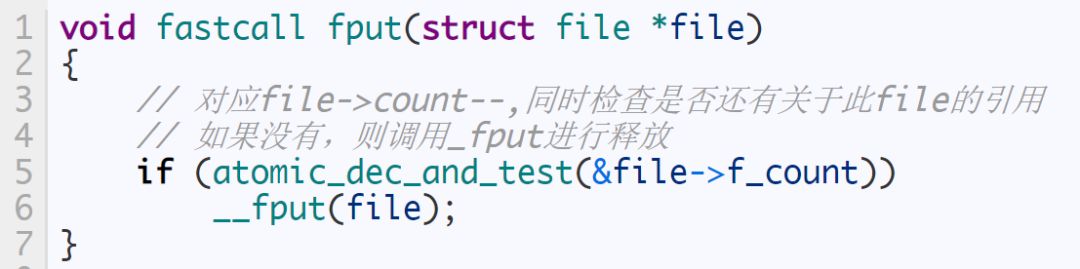

Then we enter fput:

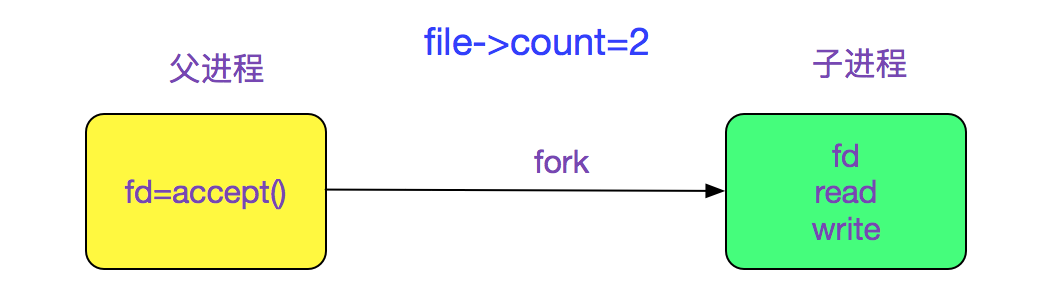

It is very common for the same file (socket) to have multiple references, as in the following example:

This is why, when writing a multi-process socket server, the parent process must also call close(fd) once; otherwise the file's reference count never drops to zero and the socket is never actually closed.

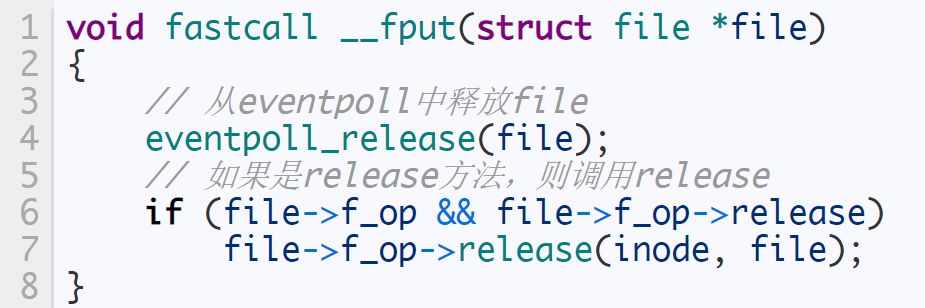

Then there is the __fput function:

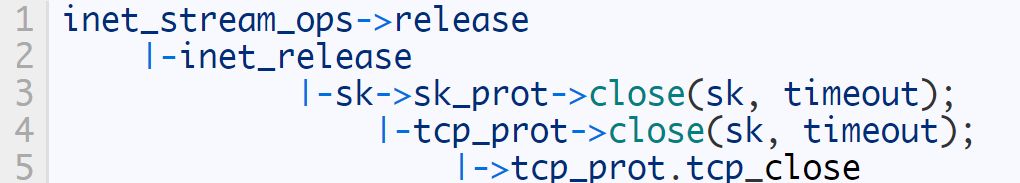

Since we are discussing socket close, let's look at how file->f_op->release is implemented for a socket:

Assignment of f_op->release

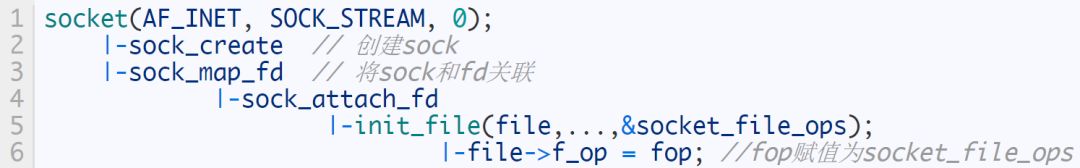

Tracing the code that creates the socket, we find that the socket's file is given socket_file_ops as its file operations.

The implementation of socket_file_ops is:

```c
static const struct file_operations socket_file_ops = {
    .owner = THIS_MODULE,
    /* ... */
    // We only consider sock_close here
    .release = sock_close,
    /* ... */
};
```

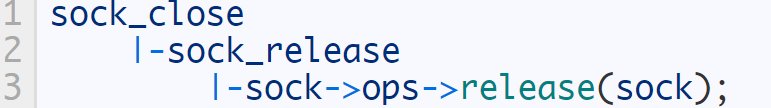

Following the code further:

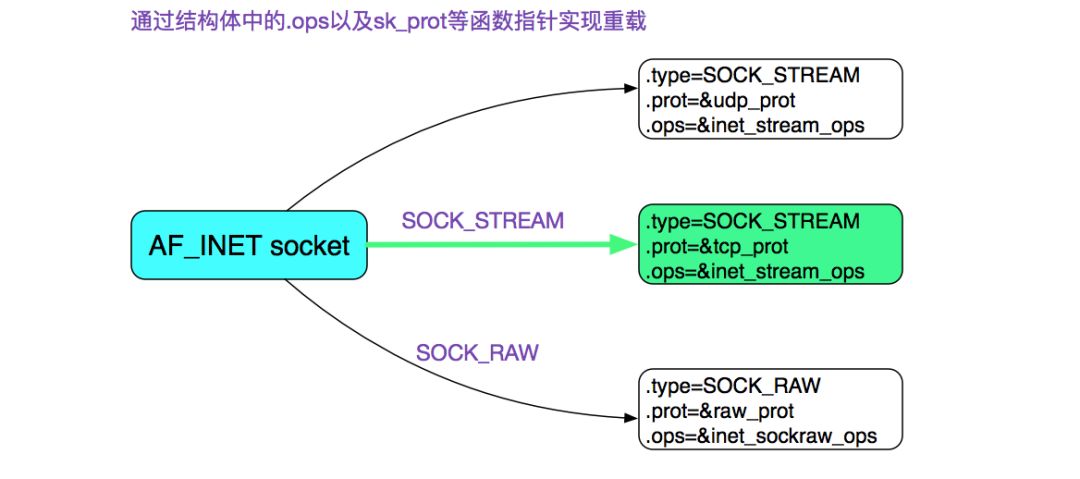

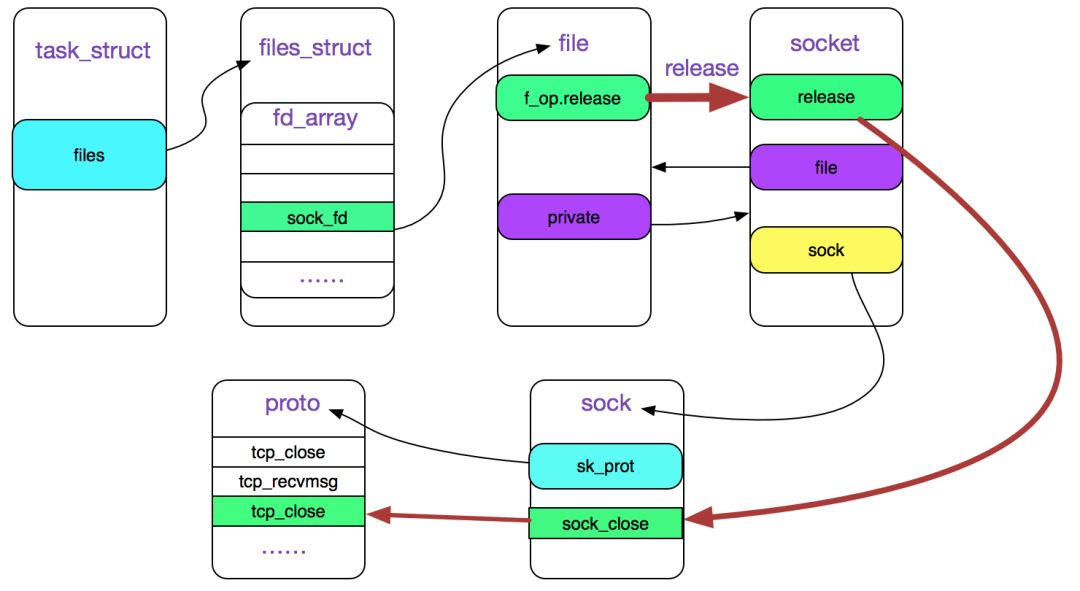

From the previous blog post, we know that sock->ops is as shown in the figure below:

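That is, sock->ops->release is inet_release, which in turn calls sk->sk_prot->close. Here we only consider TCP, so sk_prot = tcp_prot and the call lands in tcp_close.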

The relationship between fd and socket is shown in the following figure:

The red line in the figure above is the call chain of close(fd).

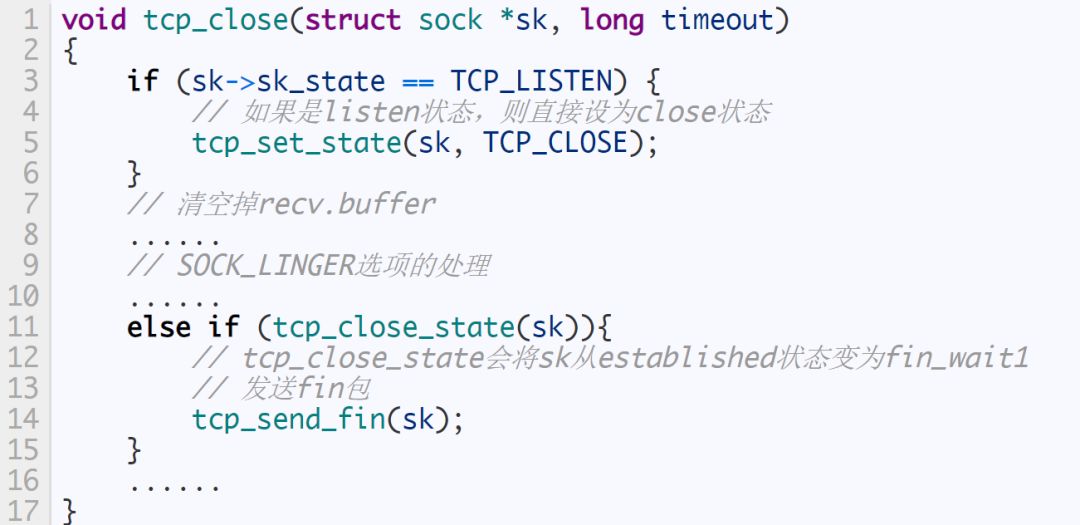

tcp_close

The four waves

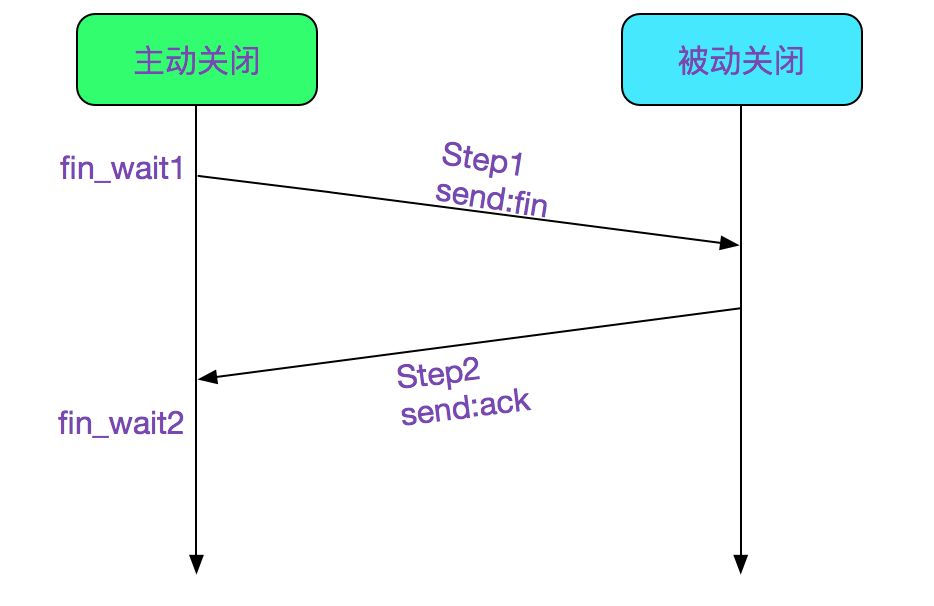

Now we come to the four waves themselves; the first two are shown in the figure below:

First, tcp_close_state(sk) sets the state to FIN_WAIT1, and then tcp_send_fin is called:

```c
void tcp_send_fin(struct sock *sk)
{
    /* ... */

    // Set the ACK and FIN flags on this skb
    TCP_SKB_CB(skb)->flags = (TCPCB_FLAG_ACK | TCPCB_FLAG_FIN);

    /* ... */

    // Push the FIN segment out now, with Nagle disabled (TCP_NAGLE_OFF)
    __tcp_push_pending_frames(sk, mss_now, TCP_NAGLE_OFF);
}
```

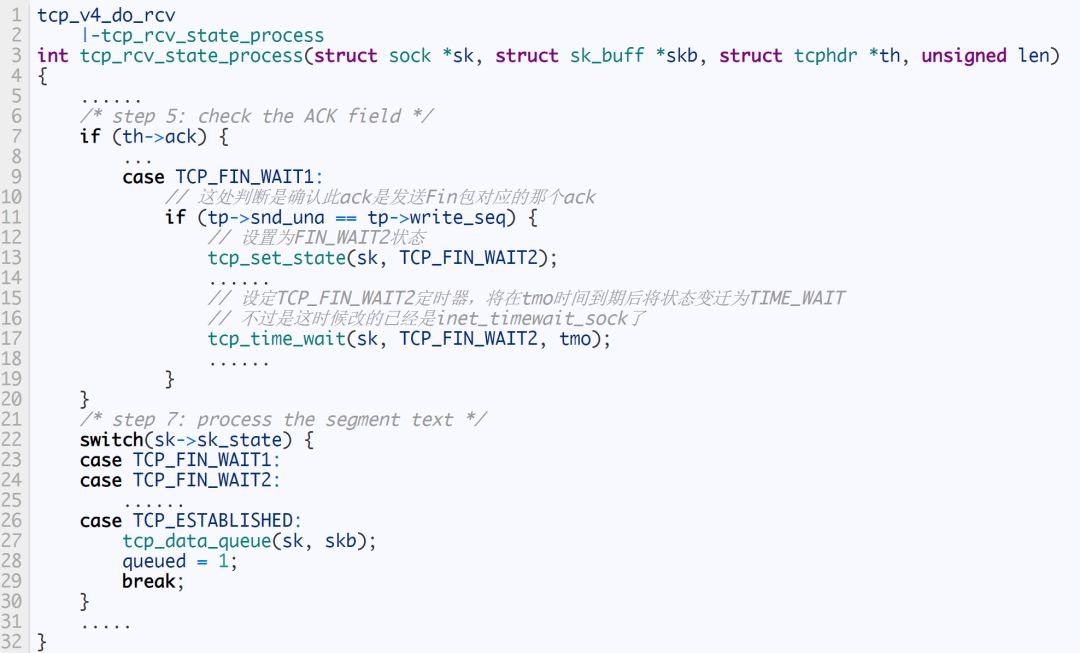

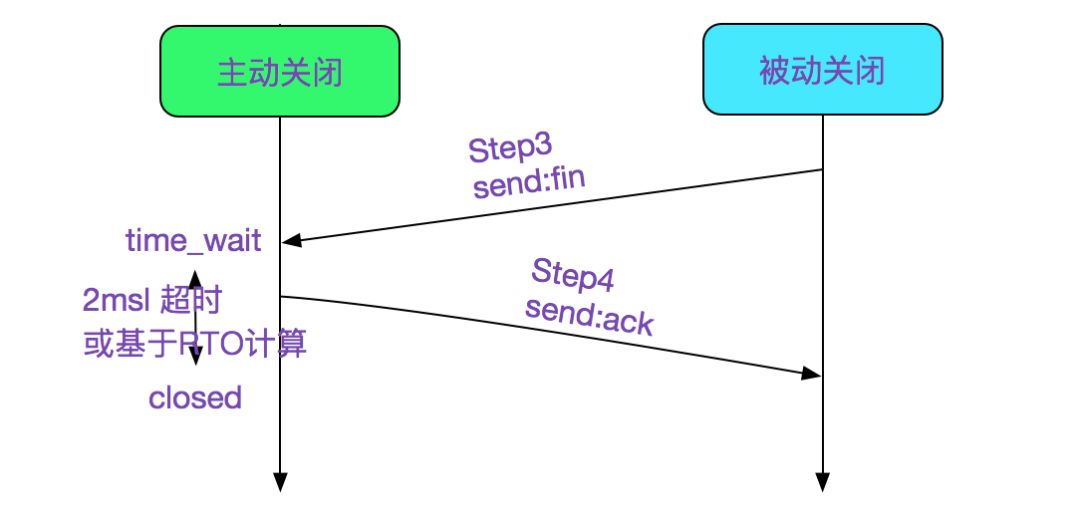

This is Step 1 in the figure above. The actively closing end then waits for the peer's ACK; when it arrives, the TCP state is set to FIN_WAIT2, as shown in Step 2 of the figure. The relevant code is as follows:

Note that after the transition from TCP_FIN_WAIT1 to TCP_FIN_WAIT2, if the application has already closed the socket, a FIN_WAIT2 timer is also set (in some code paths by calling tcp_time_wait). When this timeout, tmo, expires, the connection moves directly to the CLOSED state (by then it is already an inet_timewait_sock). tmo is based on net.ipv4.tcp_fin_timeout (60 s by default), is never less than a bound computed from the RTO, and can be configured. For IPv4 it is set as follows:

```sh
# net.ipv4.tcp_fin_timeout (in seconds)
/sbin/sysctl -w net.ipv4.tcp_fin_timeout=30
```

As shown below:

This timer exists so that if the peer never sends its FIN, for whatever reason, the connection does not stay in FIN_WAIT2 forever.

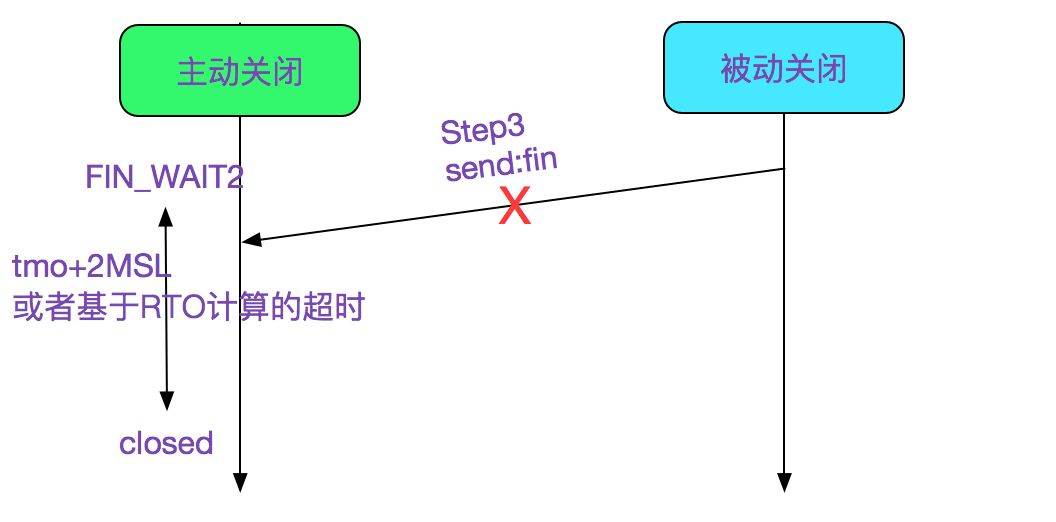

In FIN_WAIT2 the socket then waits for the peer's FIN, completing the last two waves:

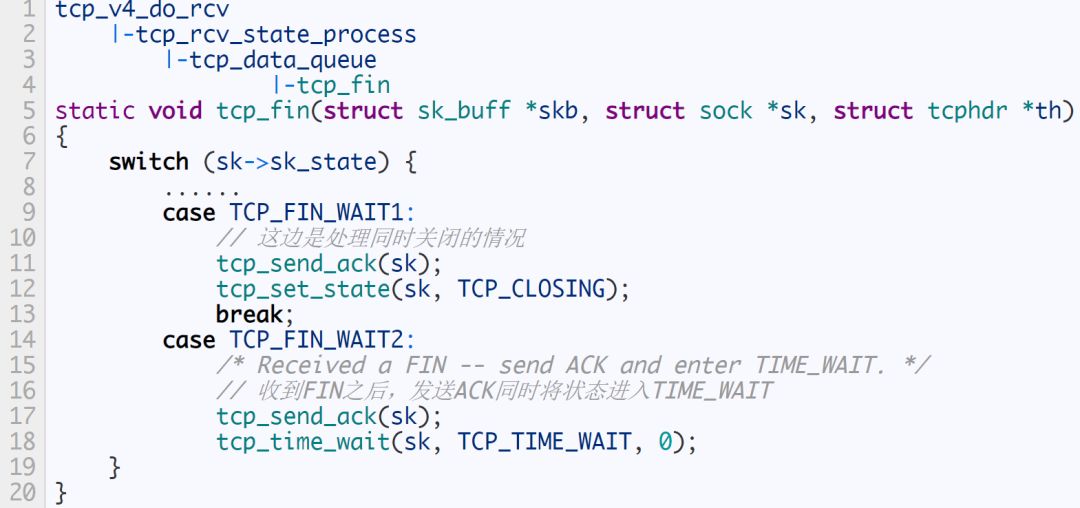

Steps 1 and 2 brought the state to FIN_WAIT2; when the peer's FIN arrives, the state becomes TIME_WAIT, as shown in the following code:

On entering TIME_WAIT, the original socket is destroyed and replaced by a new, smaller inet_timewait_sock, so that the original socket's resources can be reclaimed promptly. The inet_timewait_sock is placed in a bucket, and inet_twdr_twcal_tick periodically removes from the bucket the TIME_WAIT entries whose time (2MSL, or a time based on the RTO) has run out. Let's look at the tcp_time_wait function:

```c
void tcp_time_wait(struct sock *sk, int state, int timeo)
{
    /* ... */

    // Create the inet_timewait_sock
    tw = inet_twsk_alloc(sk, state);

    /* ... */

    // Put it in its slot in the bucket; the timer deletes it later
    inet_twsk_schedule(tw, &tcp_death_row, timeo, TCP_TIMEWAIT_LEN);

    /* ... */

    // Set the sk state to TCP_CLOSE, then reclaim the sk's resources
    tcp_done(sk);
}
```

The specific timer operation function is inet_twdr_twcal_tick, which will not be described here.

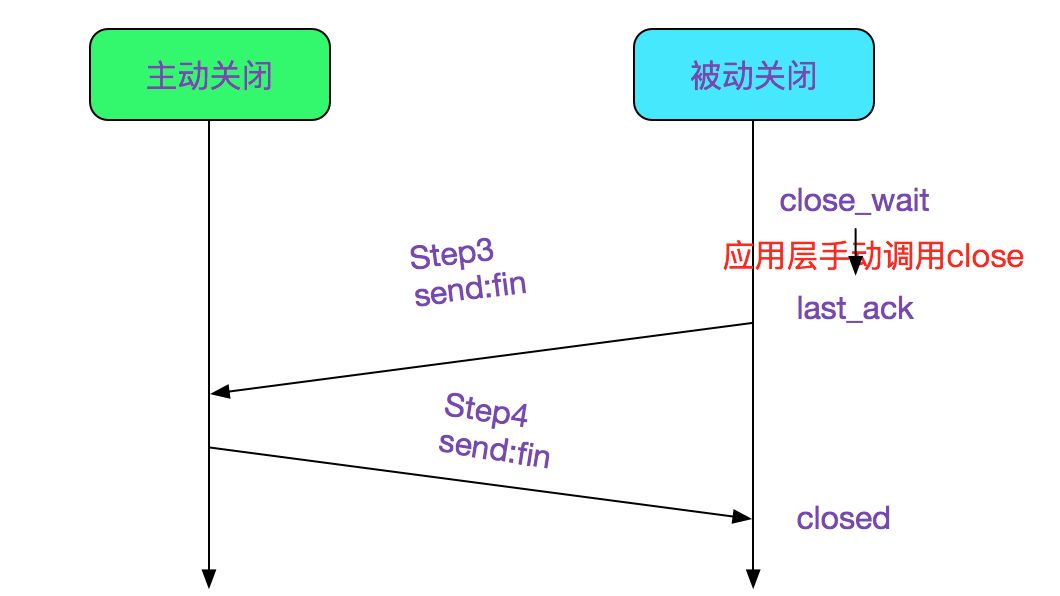

Passive close

close_wait

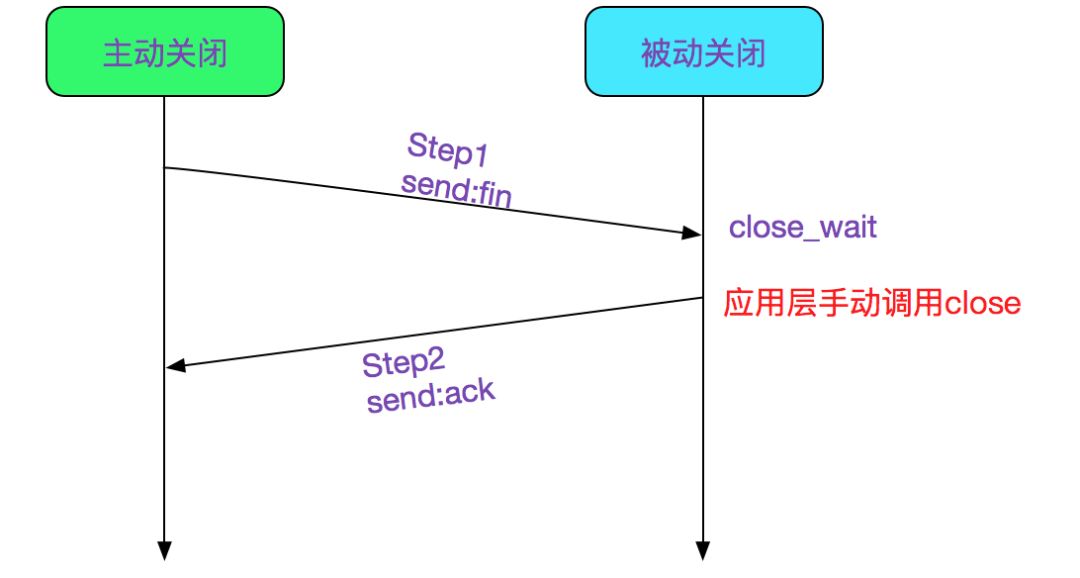

When a TCP socket in the ESTABLISHED state receives a FIN from the peer, it is being closed passively and enters CLOSE_WAIT, as shown in Step 1 of the following figure:

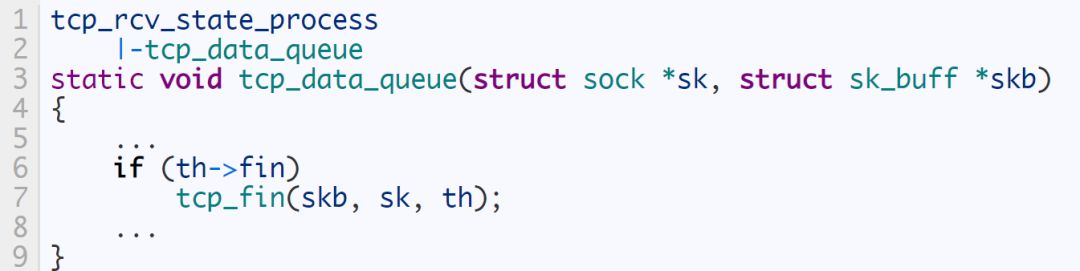

The specific code is as follows:

Next, let's look at tcp_fin:

The interesting point here is that after receiving the peer's FIN, the socket does not immediately send an ACK to tell the peer it has been received. Instead it waits to piggyback the ACK on outgoing data, or sends it when the delayed-ACK timer expires.

Once the peer has closed, a read on the application side returns 0, and the application should then call close itself to close the connection:

```c
if (recv(sockfd, buf, MAXLINE, 0) == 0) {
    // The peer has closed (its FIN arrived): close our side too
    close(sockfd);
}
```

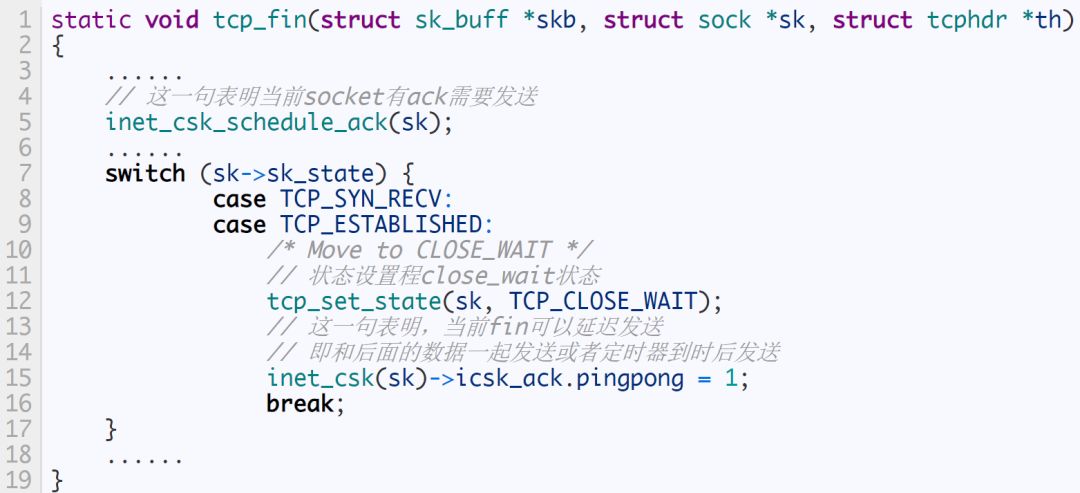

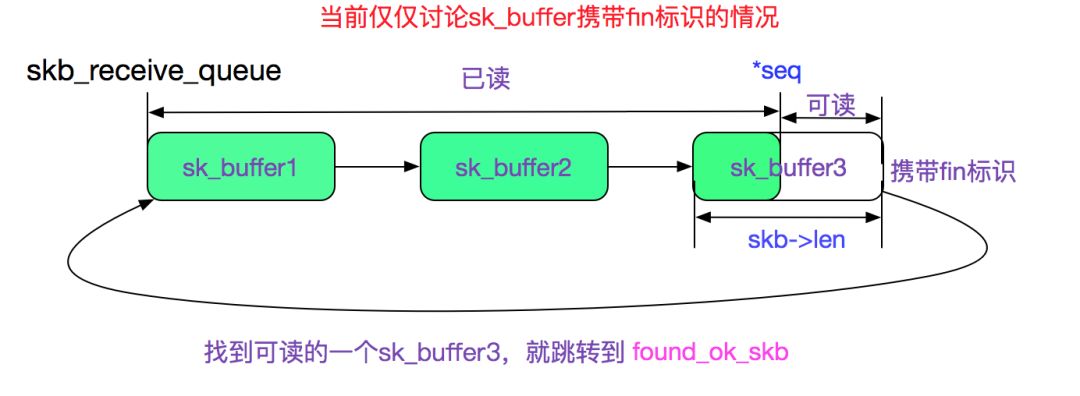

Let's look at how recv handles the FIN packet so that it returns 0. As the previous blog post showed, recv ends up calling tcp_recvmsg. Because that function is complex, we will look at it in two parts:

tcp_recvmsg, part one

The processing done by the code above is shown in the following figure:

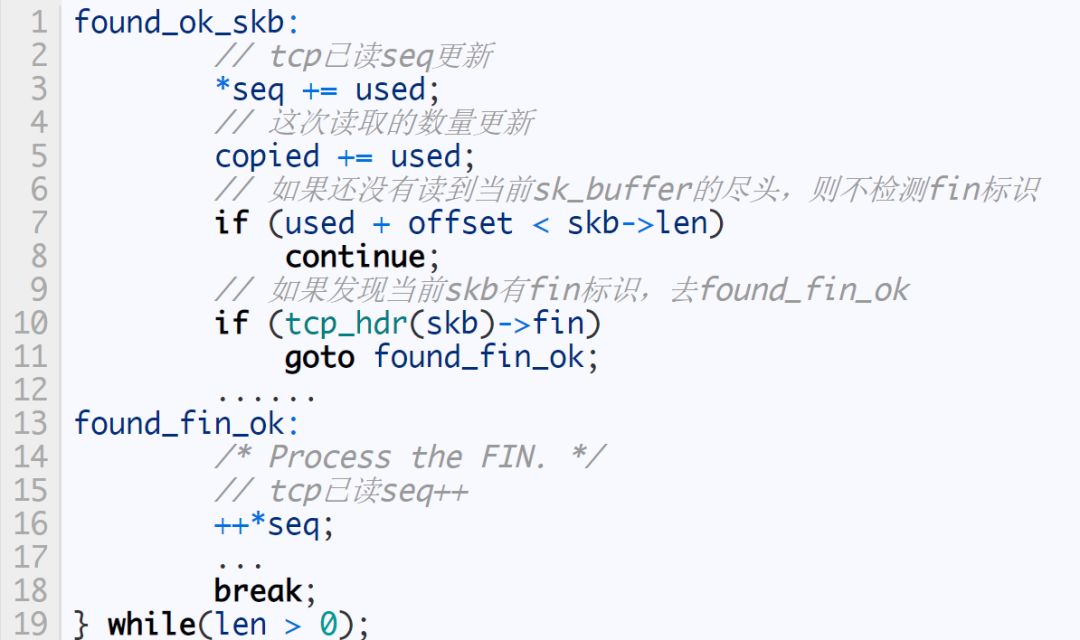

Now let's look at the second part of tcp_recvmsg:

The code above shows that once the current skb has been read and it carries the FIN flag, the number of bytes read so far is returned, even if that is fewer than the user asked for. On the next read, tcp_recvmsg jumps to found_fin_ok and returns 0 without reading any data in the first part described above. This is how the application learns that the peer has closed. As shown below:

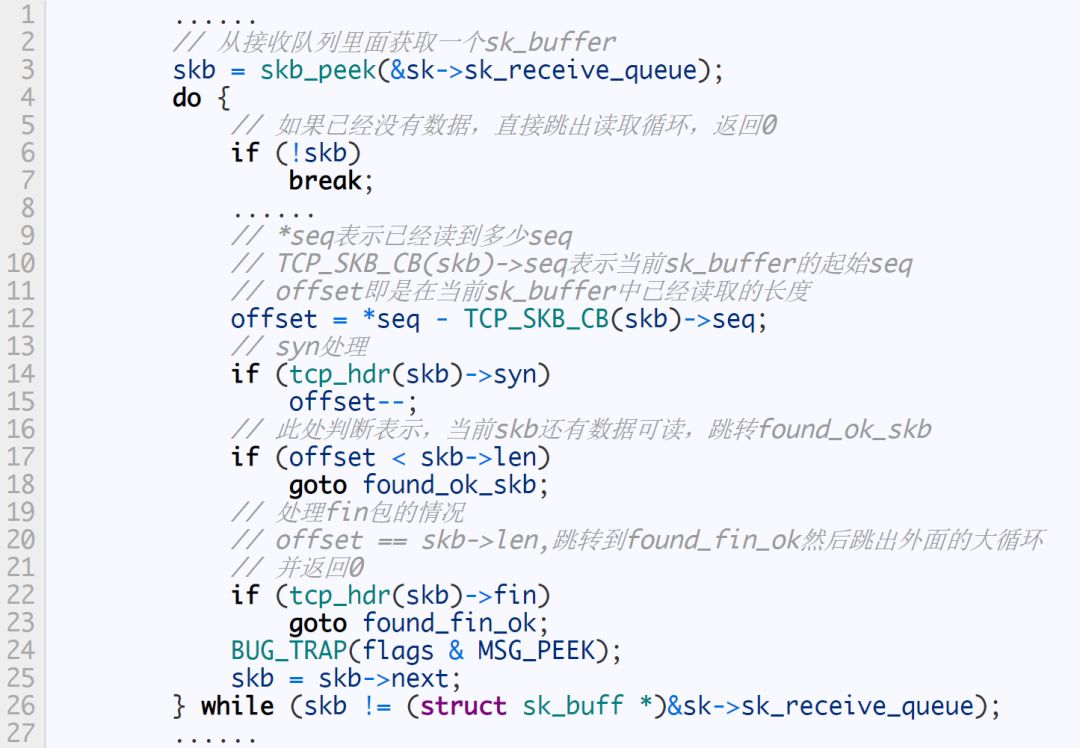

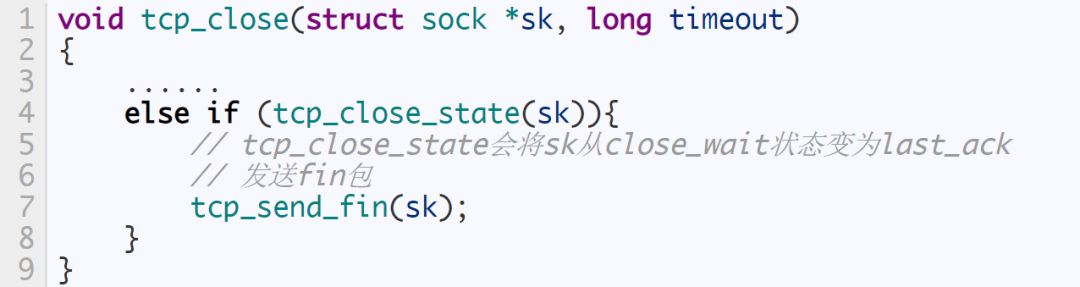

last_ack

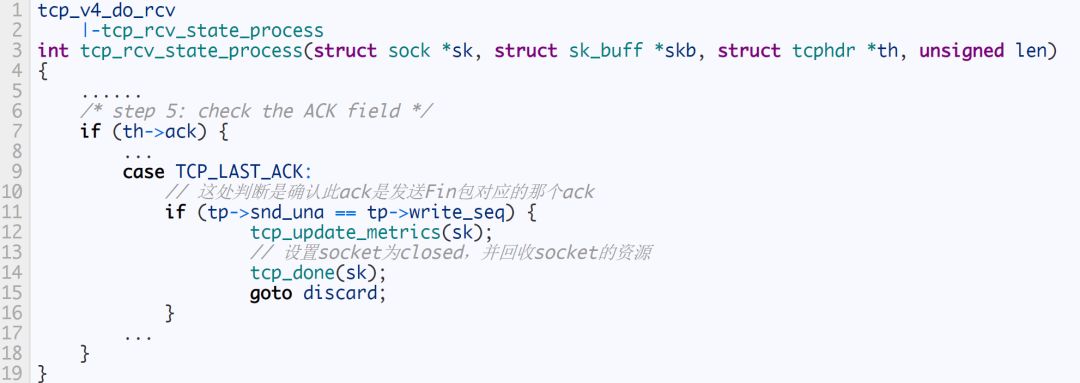

By the time the application sees that the peer has closed, the socket is already in CLOSE_WAIT. If close is called now, the state changes to LAST_ACK and the local FIN is sent, as shown in the following code:

After the final ACK arrives from the actively closing end, tcp_done(sk) is called to set sk to the TCP_CLOSE state and reclaim its resources, as shown in the following code:

The code above implements the last two waves on the passively closing end, as shown in the following figure:

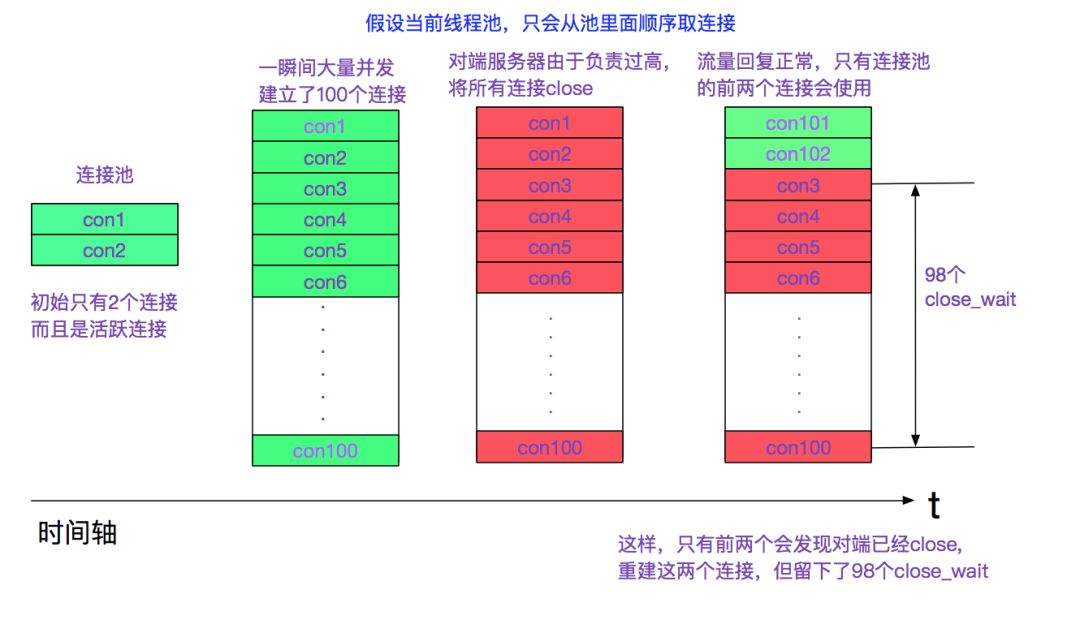

Large numbers of CLOSE_WAIT connections

A large number of CLOSE_WAIT connections on Linux usually means the application did not close the connection promptly after the peer's FIN was detected. One possible scenario is shown in the figure below:

When this happens, the cause is usually a misconfigured parameter such as minIdle (if the connection pool periodically shrinks its idle connections). Adding a heartbeat to the connection pool can also solve the problem.

If the application closes too late, the peer may already have destroyed the connection. When the application then sends its FIN, the peer cannot find a matching connection and responds with an RST (reset).

When does the operating system reclaim CLOSE_WAIT connections?

If the application never calls close, does the operating system eventually reclaim the connection? Yes, provided keepalive is enabled on the socket (SO_KEEPALIVE): TCP has a keepalive timer, and once the connection has been idle for the configured time and the keepalive probes fail, the connection is forcibly closed. The keepalive timing can be set as follows:

```
# /etc/sysctl.conf
net.ipv4.tcp_keepalive_intvl = 75
net.ipv4.tcp_keepalive_probes = 9
net.ipv4.tcp_keepalive_time = 7200
```

These are the defaults, and they are very large: the first probe is sent only after 7200 s of idle time, and with 9 probes 75 s apart, a peer that never answers is dropped after roughly 7200 + 9 × 75 = 7875 s. To reclaim CLOSE_WAIT connections faster you can set smaller values, but the real fix still has to come from the application.

For more on the TCP keepalive timer, see another of the author's blog posts:

https://my.oschina.net/alchemystar/blog/833981

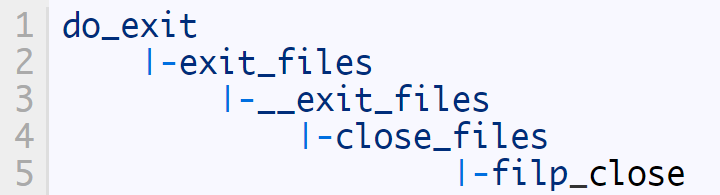

Clean up socket resources when the process is closed

When a process exits (whether via kill, kill -9, or a normal exit), all of its file descriptors are closed.

This brings us back to filp_close from the beginning of this post: each fd is closed, and for every TCP socket whose last reference goes away, tcp_send_fin sends a FIN.

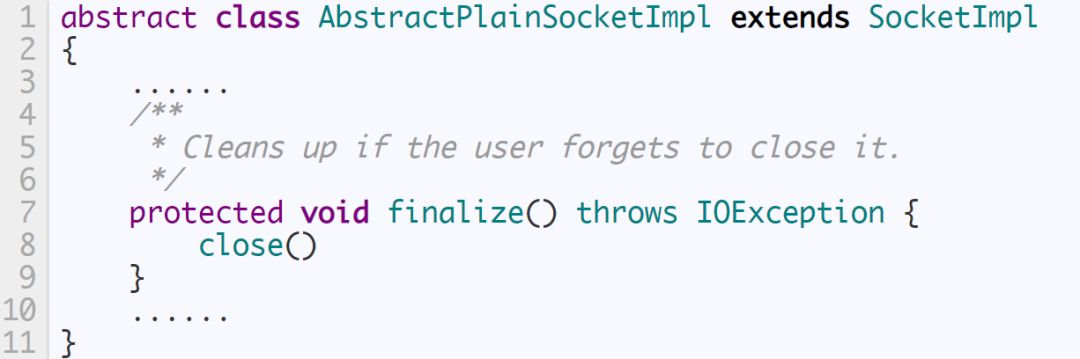

Clean up socket resources during Java GC

A Java Socket is ultimately backed by AbstractPlainSocketImpl, which overrides Object's finalize method.

So Java closes unreferenced sockets when they are garbage-collected. Do not rely on this, though: GC is not triggered by the number of unreferenced sockets, so a program can leak a large number of sockets without any GC occurring.

Summary

The Linux kernel source is vast and deep, and reading it is hard work. When I read "TCP/IP Illustrated, Volume 2" earlier, the book's guidance and organization made its BSD source code feel manageable. Now that I am reading the Linux source on my own with specific questions in mind, I still get lost in the details despite that foundation. I hope this article helps others who are reading the Linux network stack code.
