The mobile phone is arguably the electronic device we are least able to part with in daily life. Since its birth it has moved from analog to digital, from 1G to today's 4G and the coming 5G, from a single function (calling) to a full range of functions, and from the feature phone to the smartphone. The change has been enormous. Audio on the phone started with voice calls only; today it also covers music playback, recording, and intelligent voice (voice input and voice interaction). A smartphone has so many audio scenarios that audio is arguably the most complex subsystem in the mobile multimedia system. Today we will talk about audio on Android smartphones.

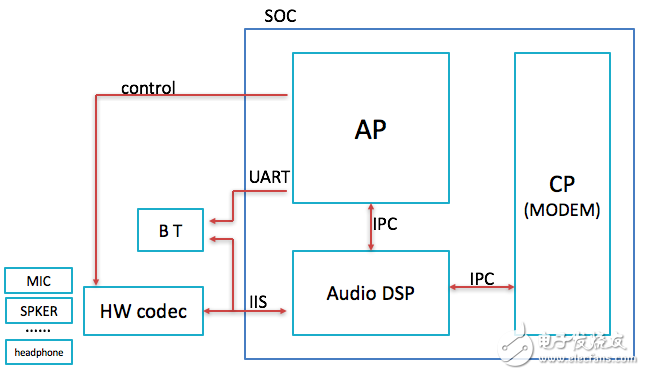

Let's start with the hardware. The following figure is a block diagram of the mainstream audio-related hardware in current Android smartphones.

In the figure above, the AP is the application processor, which mainly runs the operating system (such as Android) and the applications. The CP is the communication processor, also called the baseband processor (BP) or modem. It handles everything related to communication; for example, it largely determines whether the phone's signal is good or not. The audio DSP, as the name suggests, is a DSP that processes audio. When I first worked on phones I was puzzled: AP clock frequencies are so high now, and audio processing (especially voice) puts little load on the CPU, so the AP could handle it by itself. Why add a separate audio DSP and increase the cost? As my work went deeper, I learned that the main reason is power consumption. Power consumption is a key metric for a phone, one that must be met for certification, and it determines battery life. As long as battery technology has not made a breakthrough, longer battery life has to come from lower power consumption. Calling and listening to music are among the most important functions of a phone, so power consumption must be cut in these two scenarios. How? By adding a DSP dedicated to audio processing, so that the AP can stay asleep most of the time during calls and music playback. The AP, CP, and audio DSP exchange control messages and audio data through IPC (inter-processor communication). Usually the AP, CP, and audio DSP (along with other function-specific processors) are integrated into one chip to form an SoC (system on chip).
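
To make the IPC idea concrete, here is a minimal sketch of how a control message or a block of audio data might be framed before being passed between processors. The wire format (a 16-bit message ID and a 16-bit payload length, little-endian) and the message IDs are hypothetical, invented for illustration; real SoCs use their own vendor-specific formats.

```python
import struct
from typing import Tuple

# Hypothetical wire format (not taken from any real SoC):
#   uint16 msg_id, uint16 payload_len, then the payload bytes; little-endian.
HEADER = struct.Struct("<HH")

# Hypothetical message IDs, for illustration only.
MSG_START_PLAYBACK = 0x0001  # control message
MSG_PCM_DATA = 0x0002        # audio data message


def pack_message(msg_id: int, payload: bytes = b"") -> bytes:
    """Prefix the payload with the fixed-size header."""
    return HEADER.pack(msg_id, len(payload)) + payload


def unpack_message(frame: bytes) -> Tuple[int, bytes]:
    """Split a frame into (msg_id, payload), checking the length field."""
    msg_id, length = HEADER.unpack_from(frame)
    payload = frame[HEADER.size:HEADER.size + length]
    if len(payload) != length:
        raise ValueError("truncated IPC frame")
    return msg_id, payload


if __name__ == "__main__":
    # Control message: ask the DSP to start playback at 48 kHz, 2 channels.
    ctrl = pack_message(MSG_START_PLAYBACK, struct.pack("<IH", 48000, 2))
    print(unpack_message(ctrl))
```

The same framing carries both kinds of traffic the text mentions: small control messages and larger blocks of audio data.
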
In addition, there is a hardware codec chip, used mainly for audio capture and playback. It is controlled by the AP (enabling and selecting audio paths, mostly by configuring registers) and exchanges audio data with the audio DSP over the I2S bus. Various peripherals are connected to the hardware codec: the MICs (dual-MIC designs are currently mainstream), the earpiece (receiver), the speaker, and the wired headset (which comes in 3-pole and 4-pole variants; the 3-pole plug has no MIC, the 4-pole plug does). The Bluetooth headset is a special case: it is connected directly to the audio DSP over the I2S bus, because audio capture and playback happen in the Bluetooth chip. When listening to music on a Bluetooth headset, the audio stream is decoded to PCM on the AP and sent straight to the Bluetooth headset over the UART using the A2DP protocol, rather than going through the audio DSP and the I2S bus.
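
As a rough feel for how much data the I2S link between the codec chip and the audio DSP carries, the bit clock of a standard stereo I2S bus is the sample rate times the slot width times the number of channels. The sample rates and the 16-bit slot width below are typical values chosen for illustration, not figures from any particular phone.

```python
def i2s_bit_clock_hz(sample_rate_hz: int, bits_per_slot: int = 16,
                     channels: int = 2) -> int:
    """Bit clock of an I2S link: one slot per channel per sample period."""
    return sample_rate_hz * bits_per_slot * channels


def pcm_bytes_per_second(sample_rate_hz: int, bytes_per_sample: int = 2,
                         channels: int = 2) -> int:
    """Payload rate of the PCM stream carried on the link."""
    return sample_rate_hz * bytes_per_sample * channels


if __name__ == "__main__":
    # Illustrative rates: narrowband voice, wideband voice, music.
    for rate in (8000, 16000, 48000):
        print(rate, i2s_bit_clock_hz(rate), pcm_bytes_per_second(rate))
```

Even at 48 kHz stereo the link runs at only about 1.5 Mbit/s, which is why a simple serial bus is enough here.
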

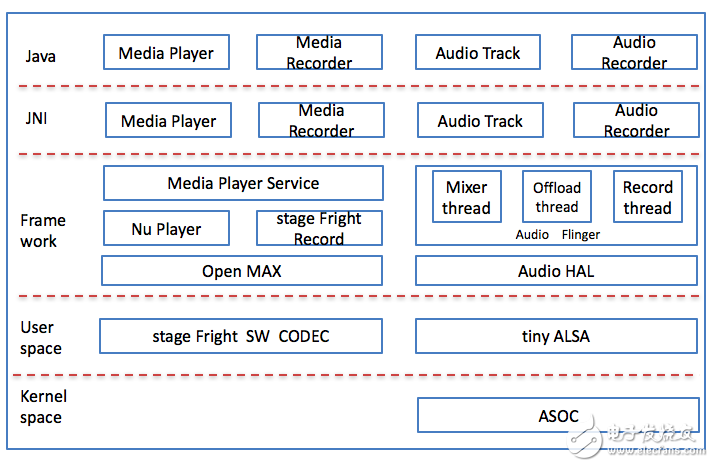

Now let's look at the software. Audio-related software runs on all three processors (AP, CP, and audio DSP). First, the audio software on the AP. Since this article is about audio on Android smartphones, the system in question is Android, and Android runs on the AP. Android has a multimedia framework, of which audio is one part. The software block diagram of the audio part on the AP is as follows:

Android audio software is divided into layers: kernel, user space, Framework, JNI, and Java. Let's start from the bottom, the kernel. Android's kernel is Linux. The audio subsystem on Linux is called ALSA, and it has two parts, kernel space and user space. The kernel-space part is the audio driver, and the user-space part mainly provides APIs for applications to call. Android uses the same audio driver as Linux, but trims down the user-space part of ALSA to form tinyalsa. I described these briefly in an earlier article (on audio capture and playback); take a look if you are interested. User space also holds the various software codec libraries, for music (MP3, AAC, etc.) and for voice (AMR-NB, AMR-WB, etc.). Next up is the Framework. It contains many modules, mainly NuPlayer, StagefrightRecorder, OpenMAX, AudioFlinger, and so on. There are plenty of articles about the audio Framework online, so I won't go into detail here. The Audio HAL (Hardware Abstraction Layer) also sits in this layer. Above the Framework is JNI (Java Native Interface), which exposes interfaces for Java to call. The main ones are MediaRecorder, MediaPlayer, AudioTrack, and AudioRecord. The top layer is Java, where the various apps with audio features live, built on the APIs provided.
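
At the tinyalsa level, a PCM stream is described by a handful of numbers, and these directly determine buffer size and latency. The sketch below mirrors the `channels`, `rate`, `period_size`, and `period_count` fields of tinyalsa's `struct pcm_config` in a small Python class. The `bytes_per_sample` field is a simplification standing in for tinyalsa's `format` enum, and the example values are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class PcmConfig:
    """Python stand-in for the main fields of tinyalsa's struct pcm_config."""
    channels: int
    rate: int              # sample rate in Hz
    period_size: int       # frames per period (one DMA interrupt)
    period_count: int      # periods in the ring buffer
    bytes_per_sample: int = 2  # assumption: 16-bit PCM

    @property
    def buffer_frames(self) -> int:
        return self.period_size * self.period_count

    @property
    def buffer_bytes(self) -> int:
        return self.buffer_frames * self.channels * self.bytes_per_sample

    @property
    def period_ms(self) -> float:
        return 1000.0 * self.period_size / self.rate

    @property
    def buffer_ms(self) -> float:
        return 1000.0 * self.buffer_frames / self.rate


if __name__ == "__main__":
    cfg = PcmConfig(channels=2, rate=48000, period_size=1024, period_count=4)
    print(cfg.buffer_bytes, round(cfg.period_ms, 2), round(cfg.buffer_ms, 2))
```

Larger periods mean fewer wake-ups (good for power) but higher latency, which is the same trade-off that motivates the audio DSP in the first place.
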

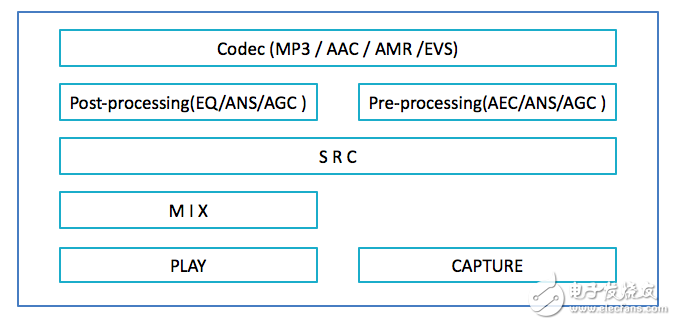

Next, the audio software on the audio DSP. The following figure is its software block diagram:

As the figure above shows, the main modules are codecs (MP3, AAC, AMR, EVS, etc.), pre-processing (AEC, ANS, AGC, etc.), post-processing (EQ, AGC, ANS, etc.), resampling (SRC), mixing (MIX), capturing audio data from DMA (CAPTURE), and sending audio data to DMA for playback (PLAY). There are also modules that handle audio data received from and sent to the other processors, since both the AP and the CP exchange audio data with the audio DSP.
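
Two of the modules named above, MIX and SRC, are simple enough to sketch. The following is a minimal illustration on 16-bit PCM samples held in Python lists, not how any real DSP firmware implements them: the mixer adds two streams with saturation, and the resampler uses plain linear interpolation. A production SRC would apply a proper low-pass (anti-aliasing) filter, which is omitted here.

```python
from typing import List

INT16_MIN, INT16_MAX = -32768, 32767


def mix_pcm16(a: List[int], b: List[int]) -> List[int]:
    """Mix two 16-bit PCM streams sample by sample, saturating on overflow."""
    n = max(len(a), len(b))
    out = []
    for i in range(n):
        s = (a[i] if i < len(a) else 0) + (b[i] if i < len(b) else 0)
        out.append(max(INT16_MIN, min(INT16_MAX, s)))
    return out


def resample_linear(samples: List[int], src_rate: int, dst_rate: int) -> List[int]:
    """Resample by linear interpolation (no anti-aliasing filter)."""
    n_out = len(samples) * dst_rate // src_rate
    out = []
    for j in range(n_out):
        pos = j * src_rate / dst_rate      # position in the source stream
        i = int(pos)
        frac = pos - i
        nxt = samples[min(i + 1, len(samples) - 1)]
        out.append(round(samples[i] + frac * (nxt - samples[i])))
    return out


if __name__ == "__main__":
    print(mix_pcm16([30000, -30000, 100], [10000, -10000]))
    print(resample_linear([0, 100, 200, 300], 8000, 16000))
```

Saturation matters in the mixer because two loud streams can easily exceed the 16-bit range, and wrapping around would produce a loud click rather than mild clipping.
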

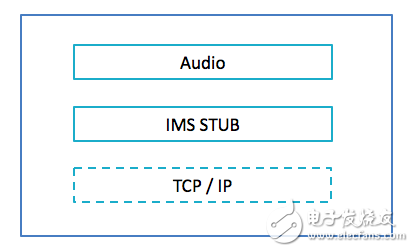

Finally, the audio software on the CP, which handles everything related to voice communication. 2G/3G and 4G are completely different here (a 2G/3G call is a CS (circuit-switched) call with a dedicated channel for voice, while 4G is a pure IP network and a call on it is a PS (packet-switched) call), so there are two separate processing mechanisms. I have never done audio processing on the CP (I mainly work on the audio DSP, and occasionally on the AP). I am not familiar with voice processing under 2G/3G, but I do have some understanding of it under 4G. The following figure is a block diagram of the audio software under 4G:

It is divided into two main parts. The IMS stub handles the voice data related to IMS (IP Multimedia Subsystem); the IMS control protocols are handled on the AP. The audio part packs and unpacks the voice data carried in IMS and exchanges it with the audio DSP.
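
The article does not describe the packet format, but voice over IMS is normally carried in RTP packets, so "pack/unpack" can be pictured as adding and stripping an RTP header around each encoded voice frame. The sketch below handles only the 12-byte fixed RTP header (version 2) and treats the payload as opaque bytes; header extensions and padding are ignored. The payload type 96 and the SSRC value in the example are arbitrary placeholders.

```python
import struct
from typing import Dict, Union

# Fixed RTP header: V/P/X/CC byte, M/PT byte, sequence number,
# timestamp, SSRC; all in network byte order.
RTP_HEADER = struct.Struct("!BBHII")


def pack_rtp(payload_type: int, seq: int, timestamp: int, ssrc: int,
             payload: bytes, marker: bool = False) -> bytes:
    """Wrap one encoded voice frame in a minimal RTP packet."""
    byte0 = 2 << 6  # version 2, no padding, no extension, CSRC count 0
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    header = RTP_HEADER.pack(byte0, byte1, seq & 0xFFFF,
                             timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)
    return header + payload


def unpack_rtp(packet: bytes) -> Dict[str, Union[int, bool, bytes]]:
    """Parse the fixed header and return the fields plus the payload."""
    if len(packet) < RTP_HEADER.size:
        raise ValueError("packet shorter than RTP header")
    byte0, byte1, seq, timestamp, ssrc = RTP_HEADER.unpack_from(packet)
    if byte0 >> 6 != 2:
        raise ValueError("not RTP version 2")
    csrc_count = byte0 & 0x0F
    offset = RTP_HEADER.size + 4 * csrc_count  # skip any CSRC entries
    return {
        "payload_type": byte1 & 0x7F,
        "marker": bool(byte1 >> 7),
        "seq": seq,
        "timestamp": timestamp,
        "ssrc": ssrc,
        "payload": packet[offset:],
    }


if __name__ == "__main__":
    # A 20 ms frame at a 16 kHz RTP clock advances the timestamp by 320.
    pkt = pack_rtp(96, seq=1, timestamp=320, ssrc=0x1234, payload=b"abc")
    print(unpack_rtp(pkt))
```

In the downlink direction the unpacked payload is what would be handed on to the audio DSP for decoding; in the uplink direction the encoded frame from the audio DSP is what gets wrapped.
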

A smartphone has many audio scenarios. Some involve the audio software on all three processors, a phone call for example. The AP mainly handles some control logic, while the CP and the audio DSP handle both control logic and voice data. In the uplink, the voice captured by the MIC is processed and encoded into a bitstream by the audio DSP and sent to the CP, which processes it and sends it to the network over the air interface. In the downlink, the CP takes the voice bitstream from the air interface, processes it, and sends it to the audio DSP, which decodes it and sends it to the codec chip and on to the peripheral for playback. Other scenarios involve only some of the processors. Music playback, for example, does not involve the audio software on the CP: when an app plays music, the music is sent from the AP to the audio DSP and then played out through a peripheral. In the next article, I will describe in detail how audio data flows in the various audio scenarios.
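
The data paths described above can be written down as ordered lists of hops, which makes it easy to see which processors touch the audio data in each scenario. This is only a bookkeeping sketch; the hop names are labels chosen here, and the codec chip hop is filled in from the hardware description earlier in the article (it applies to wired peripherals).

```python
from typing import List

PROCESSORS = {"AP", "CP", "audio DSP"}

# Audio data paths as described in the text.
CALL_UPLINK = ["MIC", "codec chip", "audio DSP", "CP", "air interface"]
CALL_DOWNLINK = ["air interface", "CP", "audio DSP", "codec chip", "peripheral"]
MUSIC_PLAYBACK = ["AP", "audio DSP", "codec chip", "peripheral"]


def processors_on_path(path: List[str]) -> List[str]:
    """Return the processors that handle audio data on a path, in order."""
    return [hop for hop in path if hop in PROCESSORS]


if __name__ == "__main__":
    for name, path in [("call uplink", CALL_UPLINK),
                       ("call downlink", CALL_DOWNLINK),
                       ("music playback", MUSIC_PLAYBACK)]:
        print(name, "->", processors_on_path(path))
```

Note that during a call the AP does not appear on either data path, since it only handles control logic; that is what lets it sleep for most of the call.
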
