Obtaining market approval for a medical device is difficult. Manufacturers must look beyond the purely technical challenges and cultivate the environment and culture needed to develop software-based medical devices. In particular, ten important premises for building medical devices and getting them approved deserve attention, yet they are often overlooked.

1. Safety culture

Companies that lack a pervasive safety culture are unlikely to produce safe medical products. A safety culture does more than allow engineers to ask questions about safety; it encourages them to weigh every decision from a safety perspective. A programmer should be able to think, "I can write this message exchange with technology A or technology B, but I am not sure how to balance the better performance of A against the higher reliability of B," and know whom to contact to discuss the decision. We must cultivate a culture that encourages programmers to think about such problems.

2. Experts

We need experts. Defining what a safety system must do, and confirming that it meets its safety requirements, takes special training and experience. A safety system must be simple, and designing a simple system is the greatest challenge for any engineer.

Ultimately, experts in the relevant fields (industry experts, system architects, software designers, process experts, programmers, verification experts, and others) are needed to determine the requirements, select an appropriate design model, and build and verify the system.

Such expertise is expensive because it comes from experience rather than the classroom: undergraduate computer engineering courses rarely cover embedded software development, and courses that teach how to build sufficiently reliable embedded systems are rarer still.

Sufficient reliability:

1) No system is absolutely reliable; we must understand how to make a system sufficiently reliable.

2) Accepting sufficient reliability can reduce development costs and gives us a way to verify safety targets.

3) If we do not understand what is reliable enough, we may design an overly complex system that is full of faults and prone to collapse.

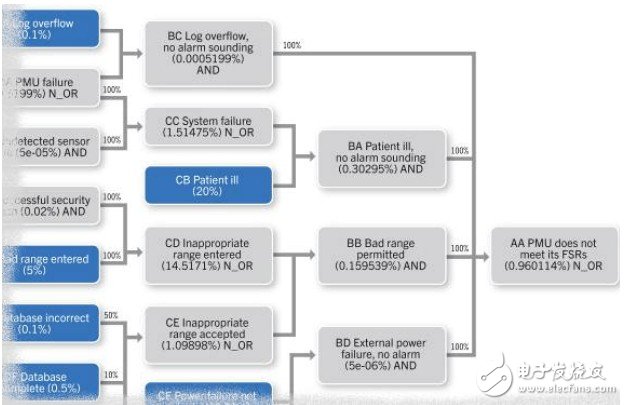

Since the mid-1990s, software design patterns and techniques have advanced greatly, but many designers have not been exposed to these changes. Figure 1 charts the probability of failure per hour for a reference design of a medical monitoring device. Identifying the risks and calculating the probability of failure accurately often requires deep expertise.

Figure 1 Chart of probability of failure per hour for reference design of medical monitoring equipment
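As a rough illustration of the kind of calculation behind such a chart, the sketch below combines per-component failure probabilities into a system figure. It is a minimal sketch, not the method used for Figure 1: it assumes that components fail independently (a real analysis must also account for common-cause failures), and every number in it is hypothetical.

```python
from math import prod


def series_failure_probability(per_hour):
    """Probability that at least one independent component fails in an hour.

    Applies when the system needs every listed component to work.
    """
    return 1.0 - prod(1.0 - p for p in per_hour)


def redundant_failure_probability(per_hour):
    """Probability that all independent redundant channels fail in the same hour."""
    return prod(per_hour)


# Hypothetical per-hour failure probabilities, for illustration only.
sensor = 1e-6
display = 2e-6
# Two redundant processors: the pair fails only if both fail.
processor_pair = redundant_failure_probability([1e-4, 1e-4])

system = series_failure_probability([sensor, processor_pair, display])
print(f"system probability of failure per hour: {system:.3e}")
```

Under these assumptions the redundant processor pair contributes far less to the total than the single sensor or display, which is why finding the dominant contributors matters more than adding redundancy everywhere.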

3. Process

IEC 62304 focuses on processes. Without good processes, we cannot prove that the system meets its safety requirements.

For qualities that are currently hard to measure, a good process serves as a measurable proxy. It is relatively easy to measure whether a process was followed; it is much harder to assess the quality of the design and code. A good process does not guarantee a good product, but it is a well-known fact that a good product cannot come from a poor process.

IEC 62304 lists the processes required to develop medical device software, not because these processes ensure a safe product, but because:

A. They provide an environment in which development parameters can be evaluated. For example, a good test process makes it possible to collect test coverage statistics. Without such a process, no claim about test coverage can be made.

B. They provide a framework for preserving the chain of evidence for the safety case. A safety case can be produced retrospectively, but doing so is expensive, because evidence that existed during development but was not preserved has to be regenerated.

4. Clear requirements

The safety requirements must state the required level of dependability and the constraints under which that level is achieved.

The FDA has recognized that "showing the rationality of indirect process data for design and production routines" is not enough to demonstrate that software is safe, and that "equipment assurance measures that focus on the safety of specific products and equipment" are also essential. This demonstration belongs in the safety case, and it reflects the point made above: the purpose of quality processes is not to guarantee a quality product, but to provide an environment in which evidence can be evaluated.

Each safety case essentially makes a claim of the form "This system will do A at level of dependability B under condition C. If it cannot do A, it will move to its design safe state with probability P." This claim and its accompanying caveats are listed in the system's safety manual, so that they can be used in the safety case of the higher-level system.
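The claim template above has four slots (A, B, C and P), so it can be recorded as structured data rather than free text. The following is a minimal sketch under that assumption; the class name, field names and example values are hypothetical and do not come from any standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SafetyClaim:
    function: str                  # A: what the system does
    level: str                     # B: the level claimed for doing it
    condition: str                 # C: the condition under which the claim holds
    safe_state_probability: float  # P: chance of reaching the design safe state

    def __post_init__(self) -> None:
        if not 0.0 <= self.safe_state_probability <= 1.0:
            raise ValueError("safe_state_probability must lie in [0, 1]")

    def statement(self) -> str:
        """Render the claim in the sentence form used by the safety case."""
        return (
            f"This system will {self.function} at {self.level} "
            f"under {self.condition}. If it cannot, it will move to its "
            f"design safe state with probability {self.safe_state_probability}."
        )


# Hypothetical values, for illustration only.
claim = SafetyClaim(
    function="report heart rate within 2 seconds",
    level="a failure probability below 1e-6 per hour",
    condition="continuous operation of up to 8 hours",
    safe_state_probability=0.999,
)
print(claim.statement())
```

Keeping the condition as an explicit field makes it harder to quote the claimed level without the limits that qualify it.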

The dependability of a system is its ability to respond to events correctly and in a timely manner, continuously: it combines availability (how often the system responds to requests in time) and reliability (how often those responses are correct).
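The two ratios in this definition can be computed from a log of responses. The sketch below is one possible reading, in which the correctness ratio is taken over the timely responses only; the `Response` record and the sample log are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass(frozen=True)
class Response:
    on_time: bool  # the system answered within its deadline
    correct: bool  # the answer it gave was right


def availability_and_reliability(log: Iterable[Response]) -> Tuple[float, float]:
    """Return (fraction answered on time, fraction of those answers that were correct)."""
    entries = list(log)
    if not entries:
        raise ValueError("the log is empty")
    timely = [r for r in entries if r.on_time]
    availability = len(timely) / len(entries)
    # With no timely responses there is nothing to be correct about.
    reliability = sum(r.correct for r in timely) / len(timely) if timely else 0.0
    return availability, reliability


# Hypothetical log: 10 requests, 8 answered on time, 6 of those correctly.
log = (
    [Response(on_time=True, correct=True)] * 6
    + [Response(on_time=True, correct=False)] * 2
    + [Response(on_time=False, correct=False)] * 2
)
availability, reliability = availability_and_reliability(log)
print(availability, reliability)
```

A system can score well on one ratio and badly on the other, which is why a claim needs to state both.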

The safety case states the system's dependability claims and provides evidence that they are met. The limits on those claims are as important as the claims themselves. For example, a medical imaging system might meet the requirements of IEC 61508 SIL 3 only for continuous operation of up to 8 hours, after which the system must be reset. Because an imaging session is usually short, this limit causes no inconvenience, even if the system is in use 24 hours a day.
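A limit like the 8-hour one is only useful if the software enforces it. The sketch below shows one way this might be done: a guard that refuses to start a session that would run past the validated window. The class and method names are hypothetical, and the clock is injectable so that the behaviour can be tested without waiting.

```python
import time
from typing import Callable


class UptimeLimit:
    """Tracks time since the last reset against a validated operating window."""

    def __init__(self, limit_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._limit = limit_seconds
        self._clock = clock
        self._started = clock()

    def reset(self) -> None:
        """Call after the system has been reset; the window starts again."""
        self._started = self._clock()

    def remaining(self) -> float:
        """Seconds left in the validated window (negative once it has expired)."""
        return self._limit - (self._clock() - self._started)

    def may_start(self, session_seconds: float) -> bool:
        """True if a session of this length would finish inside the window."""
        return session_seconds <= self.remaining()


# Assumed values: an 8-hour window and a 30-minute imaging session.
guard = UptimeLimit(limit_seconds=8 * 3600)
if guard.may_start(30 * 60):
    print("session may start")
else:
    print("reset required before the next session")
```

A monotonic clock is used so that changes to the wall-clock time cannot lengthen or shorten the window.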

5. System failure

No system is immune to bugs, especially Heisenbugs: the mysterious bugs that are "short-lived" and "disappear" when we look for them. Failures will eventually occur, so the system we build must be able either to return to normal operation or to enter its design safe state.

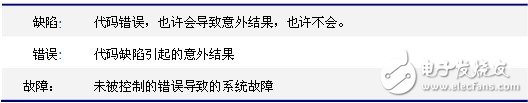

Table 1 Defect, error and failure analysis table

Since all systems contain defects, and defects may cause failures, a safety system must include multiple lines of defense:

Independence of safety-critical processes: identify which components are safety-critical, and design the system so that they cannot be affected by other components.

Prevent defects from becoming errors: the ideal solution is to find and remove defects from the code, but in practice this is very difficult. Watch out for Heisenbugs, and make sure the software design can detect defects and contain them before they develop into errors.

Prevent errors from turning into failures: techniques such as replication and diversification are better suited to hardware than to software, but used carefully they can still work.

Failure detection and recovery: in many systems it is feasible to move to a pre-defined design safe state and leave recovery to a higher-level system (such as a human). Some systems cannot do this and must recover or restart. In general, a crash-only model with fast recovery is preferable to attempting recovery in an ambiguous state.
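The last line of defense, moving to a design safe state and leaving recovery to a higher-level system, can be sketched as a small supervisor. This is a minimal illustration under stated assumptions, not a certified pattern: `Supervisor`, `dose_rate` and the "outputs disabled" action are hypothetical names, and a real device would also need hardware-level safeguards.

```python
from enum import Enum
from typing import Callable, List, Optional


class State(Enum):
    RUNNING = "running"
    SAFE = "design safe state"


class Supervisor:
    """Runs a processing step; on any error, latches into the design safe state."""

    def __init__(self, step: Callable[[float], float],
                 enter_safe_state: Callable[[], None]) -> None:
        self._step = step
        self._enter_safe_state = enter_safe_state
        self.state = State.RUNNING

    def tick(self, reading: float) -> Optional[float]:
        if self.state is State.SAFE:
            return None  # do no further work until restarted from outside
        try:
            return self._step(reading)
        except Exception:
            # Do not attempt repair in an ambiguous state: stop and hand over.
            self.state = State.SAFE
            self._enter_safe_state()
            return None

    def restart(self) -> None:
        """Invoked by the higher-level system (for example, an operator)."""
        self.state = State.RUNNING


def dose_rate(reading: float) -> float:
    """Hypothetical processing step that rejects implausible sensor readings."""
    if not 0.0 <= reading <= 10.0:
        raise ValueError("sensor reading out of range")
    return reading * 0.5


events: List[str] = []
supervisor = Supervisor(dose_rate, lambda: events.append("outputs disabled"))
results = [supervisor.tick(r) for r in (2.0, 99.0, 4.0)]
print(results, supervisor.state, events)
```

Once the out-of-range reading has been seen, the supervisor ignores later readings, even valid ones, until `restart()` is called. Resuming is then an explicit decision by the higher-level system rather than something the failed component decides for itself.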
